福祥科技(北京)有限公司首次开启世界人工智能领域文生图、文生视频、文生语音频等多模态生成模型领域的先河——天书AI多模态生成模型

2023年4月6日,福祥科技(北京)有限公司首次开启世界人工智能领域文生图、文生视频、文生语音频等多模态生成模型领域的先河——天书AI多模态生成模型

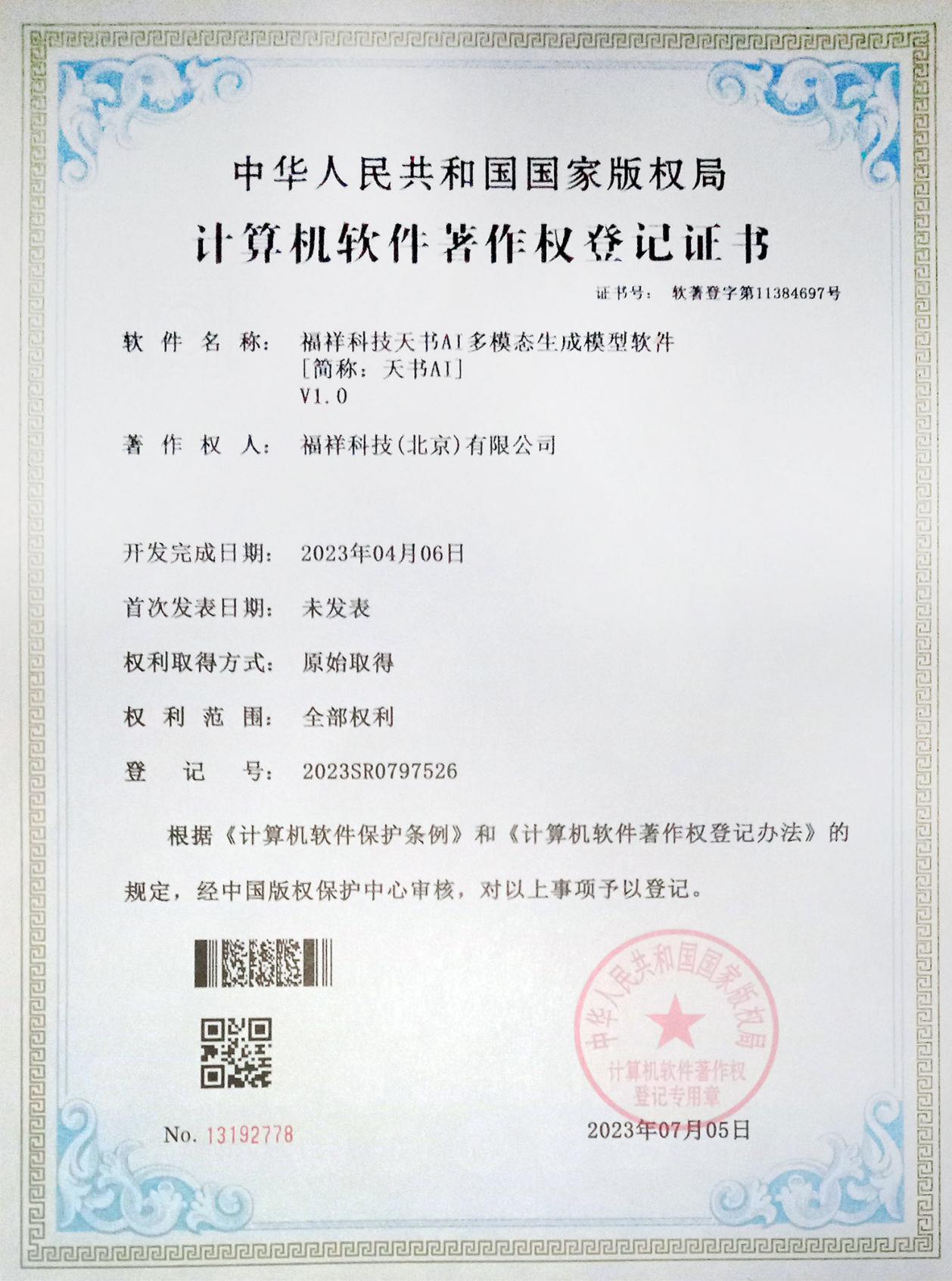

大家好!我是天书AI多模态生成模型开发者和拥有者福祥科技(北京)有限公司的总经理首席科学家杨彦增,男,联系电话19910739291,我是天书AI多模态生成模型的开发和代码编写人。我很荣幸在此向大家介绍我们处于世界领先的技术,我们首次开启了世界人工智能领域文生图、文生视频、文生语音频等多模态生成模型的先河,开启了世界首创的人工智能多模态生成模型产品——福祥科技(北京)有限公司天书AI多模态生成模型软件,简称:天书AI,国家版权软著登字:11384697号,国家版权软著登记号:2023SR0797526。

作为一位在科技领域有着丰富经验和敏锐洞察力的专业人士,我相信您对于创新技术的重要性和潜力有着深刻的理解。截至今日2023年7月16日世界范围内在同一个人工智能模型中同时拥有和支持:文生图像、文生视频、文生语音频的多模态生成模型只有两款模型,其中一个是我们于2023年4月6日开发完成的这款“天书AI多模态生成模型”,另外一个就是微软于2023年7月11日发布的人工智能模型CoDi,而我们的多模态生成模型的软著也早于微软CoDi的发布日期,已在2023年7月5日获得中国国家版权局的确认并发放了软著证书,还拥有比微软CoDi更多的生成能力,比如从文本生成语音等生成功能和更多的模态数据支持和生成,和更多的多模态融合技术,因此在多模态生成人工智能模型领域我们获得世界首创,并拥有更多的多模态生成人工智能模型软件的全部权力。

天书AI多模态生成模型是一种领先于时代的技术,天书AI多模态生成模型能够实现多模态数据的处理和生成。在当今信息爆炸的时代,我们面临着海量的文本、图像、视频和语音数据,而这些数据往往存在着相互关联和交互的关系。传统的单模态生成模型已经无法满足这种复杂多样的需求,天书AI多模态生成模型是我们团队在多模态数据处理和生成领域的最新突破。在当今数据驱动的时代,我们面临着海量而复杂的多模态数据,如文本、图像、视频和音频等。传统的单模态生成模型无法很好地处理这些多模态数据的关联和交互关系,因此我们研发了天书AI多模态生成模型,旨在为您提供一种全新的解决方案。

天书AI多模态生成模型不仅可以接受多种输入模态,还能将它们有效融合在一起进行处理和生成。它能够同时处理文本、图像、视频和语音等多种数据,并将它们转化为统一的多模态表示,从而实现更全面、更准确的信息提取和生成。无论您是需要文本生成、从文本生成图像、从文本生成视频、从文本生成语音,还是从语音生成文本,天书AI多模态生成模型都能够满足您的需求,帮助您在多个领域实现创新和突破。

天书AI多模态生成模型的这一创新技术的核心在于我们首创了更先进的智能模型架构和先进的深度学习算法及交互融合逻辑。这种深度学习技术赋予了我们的模型强大的生成能力和上下文理解能力,使其能够根据输入数据的语义和上下文生成出与之相关联的多模态结果。这意味着我们的模型能够理解您的需求,并给出令人满意的、与输入数据一致的多模态生成结果。

天书AI多模态生成模型不仅能够同时处理多种输入模态的数据,还能够将它们融合在一起进行处理和生成。无论是生成图像、生成视频,还是生成语音,天书AI多模态生成模型都能够根据输入数据的语义和上下文生成与之相关联的多模态结果。这种能力使得我们的模型具备了广泛的应用潜力,可以在多个领域带来创新和突破。

天书AI多模态生成模型还具备用户友好的用户界面,使您能够轻松地进行输入和输出操作。无论您是普通用户还是技术专家,都能够方便地利用我们的模型进行多模态数据处理和生成,为您的工作和创作带来更大的便利和效率。

我对于天书AI多模态生成模型的潜力和应用前景充满信心。我们相信,通过将多模态数据的处理和生成推向新的高度,我们能够在诸多领域实现突破和创新,如媒体与娱乐、科研、艺术创作、教育与培训、医疗与健康等千行百业提供AI生成式智能服务。我们期待与各位合作伙伴共同探索多模态生成技术的更广阔应用,并为社会的进步和发展作出贡献,并寻求投资机构合作共同开启未来AI智慧新世界。

Fuxiang Technology (Beijing) Co., Ltd. has opened the world’s first precedent in the field of artificial intelligence multimodal generative model – Tianshu AI multimodal generative model

Hello everyone! I am honored to introduce to you our world-leading technology, we have opened the world’s first multimodal generative model in the field of artificial intelligence, and opened the world’s first artificial intelligence multimodal generative model product – Fuxiang Technology (Beijing) Technology Co., Ltd. Tianshu AI multimodal generative model software, referred to as: Tianshu AI. I am Yang Yanzeng, the general manager of Fuxiang Technology (Beijing) Co., Ltd., the developer and owner of this model, 19910739291 the chief scientist of our team, the person who wrote and developed the code for this software.

As a professional with extensive experience and insight in the field of technology, I believe you have a deep understanding of the importance and potential of innovative technologies. As of today, July 16, 2023, the world has and supports the same artificial intelligence model at the same time: there are only two models of multimodal generative models that generate images, generate images, and generate speech, one of which is the “Tianshu AI multimodal generative model” that we developed on April 6, 2023, and the other is the artificial intelligence model CoDi released by Microsoft on July 11, 2023, and the soft copy of our multimodal generative model is also earlier than the release date of Microsoft CoDi, has been confirmed by the National Copyright Administration of China on July 5, 2023 and issued a software certificate, and also has more generation capabilities than Microsoft CoDi, such as generating functions such as speech from text and more modal data support and generation, and more multimodal fusion technology, so in the field of multimodal generative artificial intelligence model we have obtained the world’s first, and have more multimodal generative artificial intelligence model software full power, we have opened the world’s first multimodal generative model in the field of artificial intelligence, Tianshu AI multimodal generative model soft book registration: 11384697 number, registration number: 2023SR0797526.

Tianshu AI multimodal generative model is a technology ahead of the times, Tianshu AI multimodal generative model can realize the processing and generation of multimodal data. In today’s era of information explosion, we are faced with massive amounts of text, image, video, and voice data, which are often interrelated and interactive. The traditional single-modal generative model can no longer meet this complex and diverse demand, and the Tianshu AI multimodal generative model is the latest breakthrough of our team in the field of multimodal data processing and generation. In today’s data-driven era, we are faced with massive and complex multimodal data such as text, images, video, and voice. The traditional single-modal generative model cannot handle the correlation and interaction of these multimodal data well, so we have developed the Tianshu AI multimodal generative model to provide you with a new solution.

The Tianshu AI multimodal generative model can not only accept multiple input modalities, but also effectively fuse them together for processing and generation. It is capable of simultaneously processing multiple data such as text, images, video, and speech and transforming them into a unified multimodal representation for more comprehensive and accurate information extraction and generation. Whether you need text generation, images generated from text, video generated from text, speech generated from text, or text generated from speech, Tianshu AI multimodal generative model can meet your needs and help you achieve innovation and breakthroughs in multiple fields.

The core of this innovative technology of Tianshu AI multimodal generative model lies in our pioneering more advanced intelligent model architecture, advanced deep learning algorithms and interactive fusion logic. This deep learning technique gives our model powerful generative and contextual understanding capabilities, enabling it to generate multimodal results associated with the input data based on its semantics and context. This means that our model understands your needs and gives satisfactory, multimodal generation results that are consistent with the input data.

The Tianshu AI multimodal generative model can not only process data in multiple input modalities at the same time, but also fuse them together for processing and generation. Whether generating images, generating videos, or generating speech, Tianshu AI multimodal generative models are capable of generating multimodal results associated with the input data based on its semantics and context. This capability gives our model a wide range of application potentials that can lead to innovation and breakthroughs in multiple fields.

Tianshu AI multimodal generative model also has a user-friendly user interface, enabling you to easily perform input and output operations. Whether you are a regular user or a technical expert, you can easily use our models for multimodal data processing and generation, bringing greater convenience and efficiency to your work and creation.

I am confident in the potential and application prospects of Tianshu AI multimodal generative model. We believe that by pushing the processing and generation of multimodal data to new heights, we can achieve breakthroughs and innovations in many fields, such as media and entertainment, scientific research, art creation, education and training, medical and health and other industries to provide AI generative intelligent services. We look forward to exploring the broader application of multimodal generative technology with our partners, contributing to the progress and development of society, and seeking cooperation from investment institutions to jointly open up a new world of AI intelligence in the future.”